Study: Why troposphere warming differs between models and satellite data

Zeke Hausfather


21.06.2017 | 4:44pm

The most common measure of global temperature rise is here on the Earth’s surface, but scientists also gather data on how temperatures in the atmosphere high above us are changing.

Of particular interest is the troposphere – the lowest layer of the atmosphere where almost all of our weather occurs. To track temperatures, scientists use satellites, which have been providing data since they were first launched in the late 1970s.

Since around the start of the 21st century, the tropospheric warming recorded by satellites has been slower than the rate projected by climate models. In a new study, published in Nature Geoscience, researchers find that these differences are outside the range of what we would expect from natural variability.

Instead, they say the differences could be down to recent changes in greenhouse gas emissions, solar output, volcanic eruptions and air pollution that weren’t anticipated in the assumptions made by climate modellers.

Overall, the study suggests that while tropospheric warming has not accelerated to the extent that models have predicted in recent years, there’s little evidence that it has slowed down.

Temperatures from satellites

Much of our historical temperature data comes from weather stations, ships, and buoys on the Earth’s surface. Since 1979 temperature records of the atmosphere are also available from satellite-based microwave sounding units (MSU). These measure the “brightness” of microwave radiation bands in the atmosphere, from which scientists can estimate air temperatures.

However, the bands measured by the satellite instruments cannot easily provide the temperature of a specific layer of the atmosphere. Researchers have identified particular sets of bands that correspond to the temperature of the lower troposphere (TLT) spanning roughly 0 to 10 km, the middle troposphere (TMT) spanning around 0 to 20 km and the lower stratosphere (TLS) spanning 10 to 30 km.

Unfortunately, these bands tend to overlap a bit. For example, TMT estimates will include part of the lower stratosphere, while TLT estimates will include some surface temperature. These overlaps matter because different parts of the atmosphere are expected to react very differently to climate change.

When greenhouse gases trap outgoing heat radiated by the Earth, they tend to increase the temperature of the surface and lower atmosphere, and decrease the temperature of the upper atmosphere as less of that heat reaches it. We see this in satellite observations and data from weather balloons, where the lower stratosphere is cooling while the underlying troposphere and surface are warming.

Because the tropospheric temperature estimates from satellites overlap with part of the stratosphere, they end up combining a bit of stratospheric cooling with tropospheric warming and can underestimate the true rate of warming. To avoid this issue, the new study applies a correction to remove some of the stratospheric cooling from the TMT series. The approach they use for this is described in a previous paper published in the Journal of Climate.
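One way to picture a correction of this kind is as a weighted combination of the two satellite series, with a weight slightly above one on TMT and a matching negative weight on TLS, so that some of the stratospheric cooling is subtracted back out. The sketch below assumes that form. The function name `correct_tmt`, the weight of 1.1 and the sample anomalies are all illustrative assumptions rather than values taken from the study.

```python
def correct_tmt(tmt, tls, weight=1.1):
    """Remove part of the stratospheric signal from a TMT series.

    Assumed form: corrected = weight * TMT + (1 - weight) * TLS.
    The default weight of 1.1 is an illustrative assumption,
    not a value quoted in this article.
    """
    if len(tmt) != len(tls):
        raise ValueError("TMT and TLS series must have the same length")
    return [weight * m + (1.0 - weight) * s for m, s in zip(tmt, tls)]


# Made-up anomalies in degrees C, for illustration only.
tmt = [0.10, 0.20, 0.30]     # middle troposphere: warming
tls = [-0.20, -0.40, -0.60]  # lower stratosphere: cooling
corrected = correct_tmt(tmt, tls)
```

Because the stratospheric series is cooling, subtracting a fraction of it leaves a corrected series that warms slightly faster than the raw TMT values.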

Correcting errors in the data

Dealing with stratospheric contamination is not the only challenge when working with satellite data. Unlike on the surface, where there are tens of thousands of individual observation stations, there are only two or three MSU satellites taking measurements at any given time, and each satellite only lasts about five to ten years before it needs to be replaced.

While the satellites are designed to pass over the same part of the Earth at the same time every day, that changes as their orbits decay. A satellite that took the temperature over London at 2pm ten years ago, for example, might now be taking it at 8pm. Changing the observation times has a big effect on the temperatures measured, and researchers need to correct their measurements for this.

Similarly, a replacement satellite might measure temperatures slightly differently from its predecessor. Around the year 2000, for example, the instrument in the satellites was changed to an upgraded version of the sensor. All of these changes can introduce biases into the measurements that need to be addressed.

There are two main groups that process the same underlying MSU data to estimate atmospheric temperatures: the University of Alabama in Huntsville (UAH) and Remote Sensing Systems (RSS). Each group makes a different set of assumptions to correct for various issues in the data, and they end up with fairly different results. You can see how the UAH (yellow line) and RSS (blue) figures differ in the chart below – particularly after the year 2000.

Annual global mean middle tropospheric temperatures from RSS and UAH from 1979 through 2016, covering from 82.5 N–82.5 S. No stratospheric adjustments are included as an adjusted UAH dataset is not available. Chart by Carbon Brief using Highcharts.

While RSS generally agrees with the rate of warming seen globally in surface temperature records, UAH shows much less warming – including a more pronounced slowdown in temperature rise after 1998. The differences between satellite records are much larger than those between different surface temperature estimates. Co-author Dr Carl Mears, the co-founder of RSS, suggests that:

“In general, I think that the surface datasets are likely to be more accurate than the satellite datasets. The between-research spreads are much larger than for the surface data, suggesting larger structural uncertainty.”

These large uncertainties between satellite datasets complicate any comparison of tropospheric temperatures with climate models. They make it unclear whether the disagreement is due to issues in the models or in the observations, and they leave open the possibility that the data may be corrected further in the future.

Comparisons with climate models

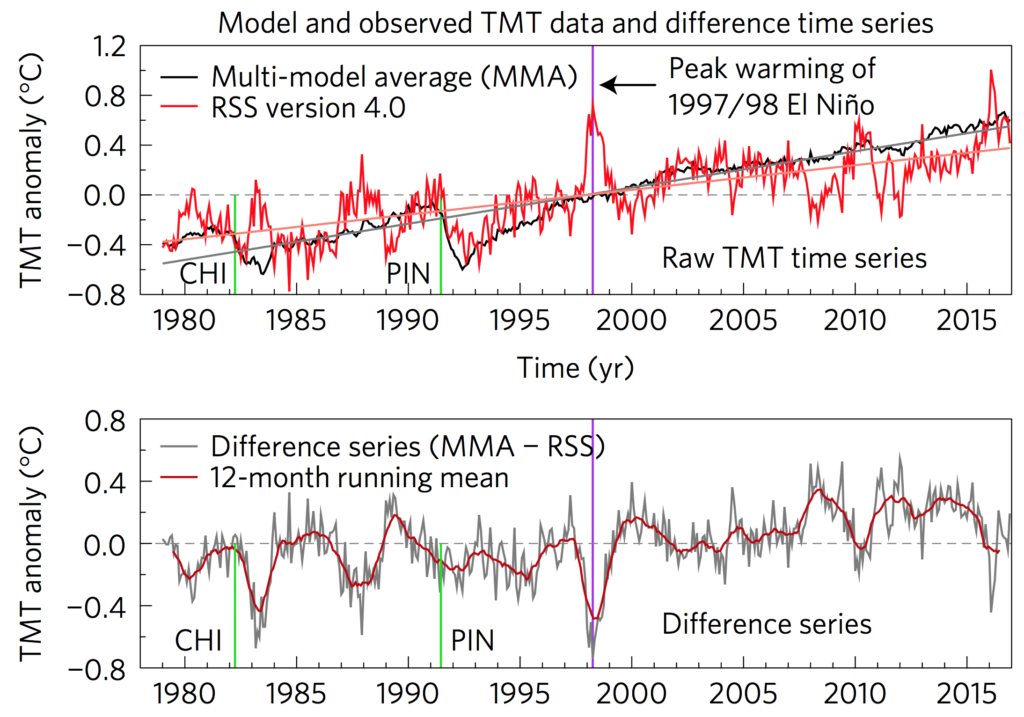

In their paper, the researchers employed a number of different statistical tests to compare climate models and observations of TMT. They corrected both models and observations for the influence of stratospheric cooling, and compared the two over the period from 1979 through 2016 as shown in the figure below. The upper chart shows the observations from RSS alongside the model average, and the lower chart shows the difference between the two.

Top panel shows stratosphere-corrected RSS TMT compared to the CMIP5 multimodel average TMT. Bottom panel shows the difference between the two over time. Source: Santer et al. (2017)

While the rate of warming in the models and observations is pretty close prior to the year 2000, the differences after 2000 are much larger. Some of these differences are explained by short-term natural variability, such as El Niño events, which do not necessarily occur at the same time in the models as in the observations and tend to average out. However, even with this removed from the observations, the researchers find that notable differences remain.
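A comparison like this can be sketched by fitting a straight line to each series and to the difference between them. The example below uses an ordinary least-squares slope expressed per decade. The function name `trend_per_decade` and the short series are made-up placeholders rather than real model or satellite data, and the statistical tests in the study itself are considerably more involved.

```python
def trend_per_decade(years, values):
    """Ordinary least-squares slope, in units per decade."""
    n = len(years)
    if n != len(values) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    sxx = sum((x - mean_x) ** 2 for x in years)
    if sxx == 0:
        raise ValueError("years must not all be identical")
    return 10.0 * sxy / sxx


# Hypothetical anomalies in degrees C, for illustration only.
years = [2000, 2001, 2002, 2003, 2004]
model = [0.30, 0.33, 0.36, 0.39, 0.42]
observed = [0.30, 0.31, 0.32, 0.33, 0.34]
difference = [m - o for m, o in zip(model, observed)]

model_trend = trend_per_decade(years, model)
observed_trend = trend_per_decade(years, observed)
difference_trend = trend_per_decade(years, difference)
```

For a least-squares fit, the trend of the difference series equals the difference between the two trends, which is why a single difference panel is enough to show whether models and observations are drifting apart.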

To explain these differences, the researchers tested a number of possible factors. First, they looked to see if the difference could be explained by longer-term multi-decadal natural variability from El Niño and ocean temperature oscillations that was not captured in the model average.

They found that natural internal variability can explain most of the relatively small differences between modelled and observed tropospheric warming in the last two decades of the 20th century, but can’t fully explain why tropospheric warming in the models is larger than in the satellite data during much of the early 21st century.

Second, they looked to see if the difference might be caused by models being too sensitive to CO2. They found no discernible relationship between a model’s sensitivity and its ability to accurately predict tropospheric temperatures over this period.
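One simple way to look for a relationship of this kind is to correlate each model's sensitivity with how far its tropospheric trend sits from the observed one. The sketch below computes a Pearson correlation by hand. It is not the test used in the paper, and the function name `pearson_r` and the per-model numbers are hypothetical values chosen purely to show the calculation.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-model values, for illustration only.
sensitivity = [2.0, 3.0, 4.0, 5.0]        # degrees C per doubling of CO2
trend_error = [0.05, -0.05, -0.05, 0.05]  # model minus observed trend

r = pearson_r(sensitivity, trend_error)
```

A value of `r` close to zero, as in this made-up case, would indicate no linear relationship between sensitivity and trend error. A value near one would suggest that the more sensitive models are the ones running warmest.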

The researchers concluded that the model-observation discrepancy isn’t down to a single factor. Specifically, they posit that it is due to a combination of internal variability and errors in some of the climate forcings used by the models in recent years.

Through 2005 or so, climate models used historical data for greenhouse gas concentrations, solar output, volcanic eruptions, air pollution and other factors that can affect the climate. After that point, they relied on assumptions about how these would change in the future. Recent research has suggested that a series of moderate volcanic eruptions, a long and unusually low minimum in the sun’s energy output during the last solar cycle, and an uptick in particulate pollution from Chinese coal-fired power plants have all changed these forcings in ways unanticipated by the modellers.

These forcings will be updated in the current modelling effort, called CMIP6, which is being carried out in preparation for the next Intergovernmental Panel on Climate Change report. This new generation of models, featuring forcings closer to observations in recent years, will likely show better correspondence with tropospheric temperature observations, but may not be any more or less sensitive to CO2 than the prior generation of models (CMIP5).

According to Dr Gavin Schmidt, director of NASA’s Goddard Institute for Space Studies, who was not involved in the paper, there are even plans to rerun the older CMIP5 generation of climate models with updated forcings to see what happens if those are updated in isolation without changing other factors.

Ultimately, the paper finds that while there is a mismatch between climate models and observations in the troposphere since the year 2000, there is little evidence to date that the model/observation differences imply that the climate is less sensitive to greenhouse gases. The results suggest that while these short-term differences between models and observations are a subject of great scientific interest, they do not diminish the reality of long-term human-driven warming.

Santer, B. D. et al. (2017) Causes of differences in model and satellite tropospheric warming rates, Nature Geoscience, doi:10.1038/ngeo2973