Roz Pidcock

28.06.2016 | 4:08pm

A new paper helps to shed light on one of the biggest questions in climate science: how much will the climate warm in future?

The answer to this depends a lot on something scientists call the “climate sensitivity” – a measure of how much the climate warms in response to greenhouse gases.

Until now, scientists have been grappling with how to reconcile the fact that different ways to estimate the climate sensitivity have, so far, come up with quite different answers.

This uncertainty has never been a reason to question whether climate change will be serious or to delay action to tackle emissions, though it has often been misused by climate skeptics in this way.

But a question mark over the value of climate sensitivity has meant that projections of future temperature rise are less precise than scientists would like. It also makes it harder to gauge our chances of staying below a given temperature limit, such as 2C above pre-industrial levels.

A new paper published in Nature Climate Change says there is, in fact, no disagreement between the different methods after all. In reality, they measure different things and once you correct for the fact that the historical temperature record underestimates past warming, the gap closes.

The implications are significant, since the finding suggests we’ve seen around 0.2C more warming than previously thought, says co-author Dr Ed Hawkins in his Climate Lab Book blog.

A sensitive question

The new paper looks at two primary ways of estimating transient climate sensitivity, defined as the amount of near-surface warming resulting from a doubling of carbon dioxide.

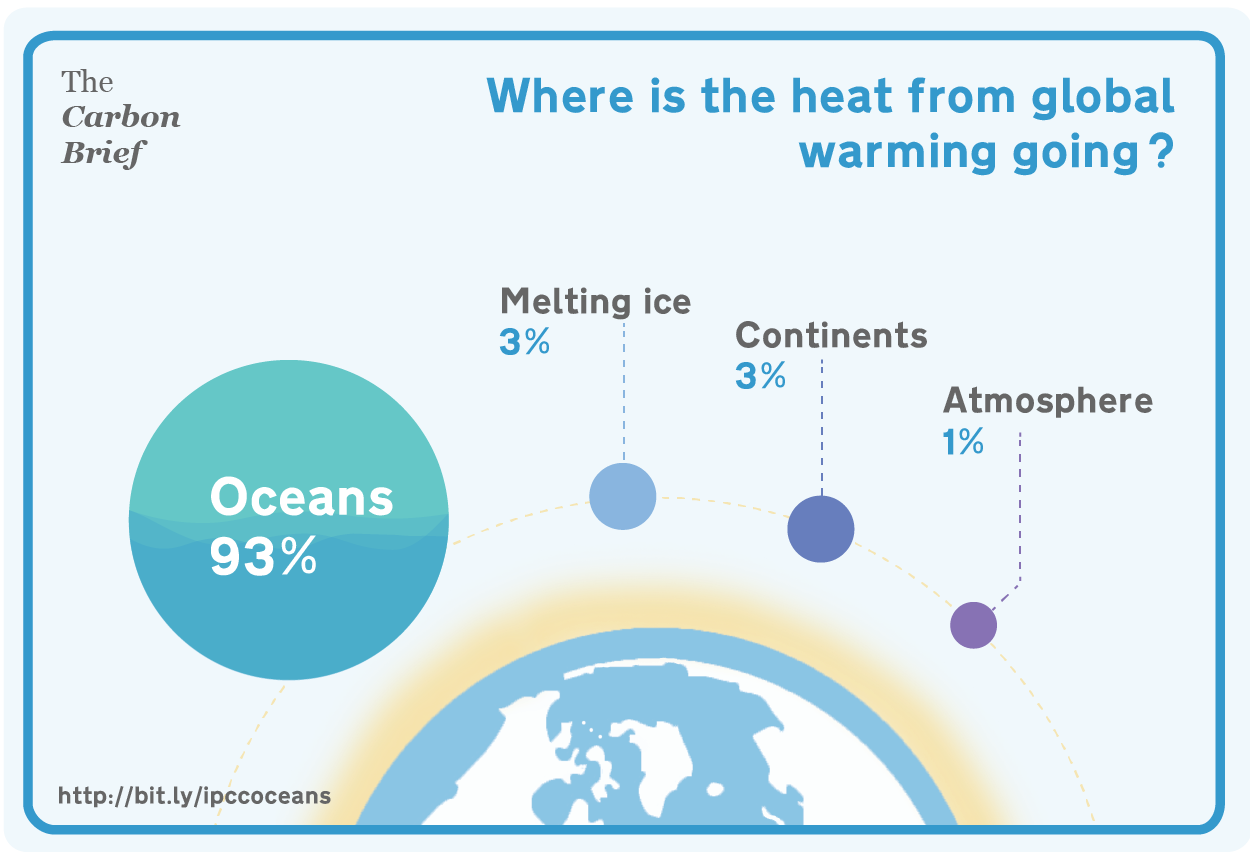

One way is to compare observations of how much the land, ocean, ice and atmosphere have warmed over the industrial period to how greenhouse gases and other factors that influence temperature, known as forcings, have changed in the same time.

But estimates of climate sensitivity from these so-called “energy budget models” tend to be lower than those simulated by climate models, raising the question of whether models are overestimating the scale of the climate response to greenhouse gases.
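As a rough illustration of the energy-budget idea, the sketch below scales observed warming per unit of forcing change up to the forcing expected from a doubling of carbon dioxide. This is a simplified relation often used in such studies, not necessarily the exact calculation in the new paper. The doubling forcing of 3.7 W/m² and both input values are assumptions chosen for illustration, and the function name is hypothetical.

```python
# Assumed forcing from a doubling of CO2, in W/m^2 (a commonly quoted round figure).
F_2XCO2 = 3.7


def transient_sensitivity(delta_t, delta_f, f_2x=F_2XCO2):
    """Scale warming (C) per unit forcing change (W/m^2) up to a CO2 doubling."""
    if delta_f <= 0:
        raise ValueError("forcing change must be positive")
    return f_2x * delta_t / delta_f


# Hypothetical inputs, for illustration only (not values from the paper).
observed_warming = 0.8  # C over the industrial period
forcing_change = 2.0    # W/m^2 over the same period

estimate = transient_sensitivity(observed_warming, forcing_change)
print(f"Illustrative transient sensitivity: {estimate:.2f} C")
```

Because the estimate is directly proportional to the observed warming, any shortfall in the temperature record carries straight through to the sensitivity estimate.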

Climate models work differently, by calculating the warming expected from greenhouse gases and all processes in the climate system known to amplify or dampen the speed of warming, known as feedbacks.

In a News and Views article accompanying the new research, Prof Kyle Armour from the University of Washington says:

“Apples to oranges”

The new study argues that issues with the way global temperatures have been measured historically mean that they underestimate the total warming over the industrial period.

First, there are places in the world where temperature data doesn’t exist. Importantly, the most poorly sampled areas are also the fastest warming. In the HadCRUT4 dataset, it is well known that gaps exist over remote parts of the Arctic, for example.

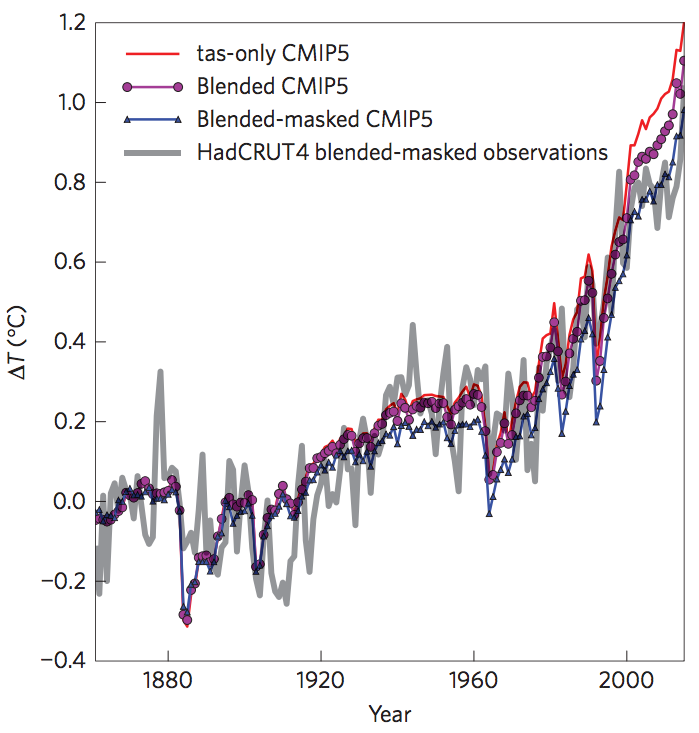

By reducing the geographical coverage in the models to match the observational record – a process known as “masking” – the scientists worked out that imperfect geographical coverage effectively pushed observation-based estimates of warming down by about 15%.
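The effect of masking can be sketched with a toy example. The code below takes an area-weighted average of warming over six latitude bands, first with full coverage and then with the Arctic band removed, as if no observations existed there. The band layout, the warming values and the mask are all hypothetical; the real analysis uses gridded model output and the actual HadCRUT4 coverage.

```python
import math

# Six 30-degree latitude bands, south to north (band edges in degrees).
BAND_EDGES = [-90, -60, -30, 0, 30, 60, 90]


def band_weights(edges):
    """Area weight of each band, proportional to the difference in sin(latitude)."""
    sines = [math.sin(math.radians(e)) for e in edges]
    return [upper - lower for lower, upper in zip(sines, sines[1:])]


def area_mean(values, weights, observed):
    """Area-weighted mean over the bands flagged as observed."""
    total = sum(w for w, ok in zip(weights, observed) if ok)
    if total == 0:
        raise ValueError("no observed bands")
    return sum(v * w for v, w, ok in zip(values, weights, observed) if ok) / total


# Hypothetical modelled warming per band (C); the Arctic band warms fastest.
model_warming = [0.6, 0.7, 0.8, 0.8, 1.0, 2.0]
weights = band_weights(BAND_EDGES)

full_coverage = [True] * 6
arctic_missing = [True] * 5 + [False]  # toy mask: no data in the northernmost band

full_mean = area_mean(model_warming, weights, full_coverage)
masked_mean = area_mean(model_warming, weights, arctic_missing)
shortfall_pct = 100 * (full_mean - masked_mean) / full_mean

print(f"Full coverage: {full_mean:.2f} C, masked: {masked_mean:.2f} C")
print(f"Masking lowers the average by {shortfall_pct:.0f}% in this toy case")
```

Leaving out a small but fast-warming region lowers the global average, which is the direction of the bias the study describes.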

Secondly, the techniques scientists use for making temperature measurements have advanced over time. Hawkins says:

But “blending” these different – and often sparse – measurements to calculate a global average temperature has unwittingly caused problems, Armour writes:

Since climate models simulate only air temperatures, this is not a like-for-like comparison.

Adapting the model output to account for the difference between seawater and near-surface air temperatures allowed the scientists to see how much the instrumental changes matter. Hawkins explains:

Doing so suggested that the instrumental changes within the HadCRUT4 dataset underestimated global temperature change over the industrial period by a further 9%, to a total of 24% when combined with the effect of incomplete observations.
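A minimal sketch of the blending comparison follows. It contrasts a global average built from air temperatures everywhere with one that uses seawater temperatures over the ocean. The warming values are hypothetical, and the sketch assumes that sea surface water warms slightly less than the air above it. The ocean fraction of 0.71 is an approximate figure and the function name is made up for this example.

```python
OCEAN_FRACTION = 0.71  # approximate share of Earth's surface covered by ocean


def global_mean(land_value, ocean_value, ocean_fraction=OCEAN_FRACTION):
    """Area-weighted global mean from one land value and one ocean value."""
    return (1 - ocean_fraction) * land_value + ocean_fraction * ocean_value


# Hypothetical warming values (C), for illustration only.
air_over_land = 1.2
air_over_ocean = 0.8
sea_surface_water = 0.7  # assumed to warm slightly less than the air above it

air_only_mean = global_mean(air_over_land, air_over_ocean)    # model-style average
blended_mean = global_mean(air_over_land, sea_surface_water)  # observation-style average
blending_gap = air_only_mean - blended_mean

print(f"Air only: {air_only_mean:.3f} C, blended: {blended_mean:.3f} C")
print(f"Blending lowers the average by {blending_gap:.3f} C in this toy case")
```

In this toy case the gap is simply the ocean fraction multiplied by the air-minus-water difference, so even a small difference over the ocean shifts the global figure.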

This difference is not trivial, says Hawkins:

Average temperature change relative to 1861–1880 for CMIP5 models with spatially complete air temperature (red), after “blending” and “masking” to account for incomplete observations and use of historical sea surface temperatures (blue). Credit: Dr Ed Hawkins, Climate Lab Book.

Based on their calculations, the scientists’ best estimate of transient climate sensitivity from the observational record, once corrected for this underestimate, is 1.7C, with a range of 1.0-3.3C.

Scaling up previous estimates based on the imperfect observational record brings them in line with the best estimate from models of 1.8C, with a range of 1.2-2.4C. Or, as Armour says:

Prof Piers Forster from the University of Leeds is cautious about overinterpreting the results too soon. He tells Carbon Brief:

In other words, questions about climate sensitivity are complicated and won’t be solved by any single bit of research.

Further questions

The impact that gaps in the temperature record have on climate sensitivity is likely to diminish in future as observational coverage improves, the study notes.

But the problem will still remain for the historical period unless scientists can rescue additional, currently undigitised, weather observations, says Hawkins.

The study raises another interesting point: which global mean temperature is relevant for informing policy? The imperfect record based on observations, or the model record with gaps filled in? On this point, Hawkins says: